I've been posting a short recording of a swelling storm labeled "Thunderstorm Synthesis." What this actually is, and how it's created, is still a little unclear, so I think it's time I gave an explanation.

Here's the description I've posted on SoundCloud:

The genesis of this track is an attempt to synthesize and sync a realistic sounding thunderstorm to picture. This recording consists of 4 samples of rain, and another 3 samples of rain+thunder that I recorded one afternoon.

Equipment used was the inbuilt mics on a Roland R-26, and a Sennheiser ME66 into a Sound Devices 702. The clips were recorded at 96kHz/24-bit, and they were processed at 48kHz/24-bit.

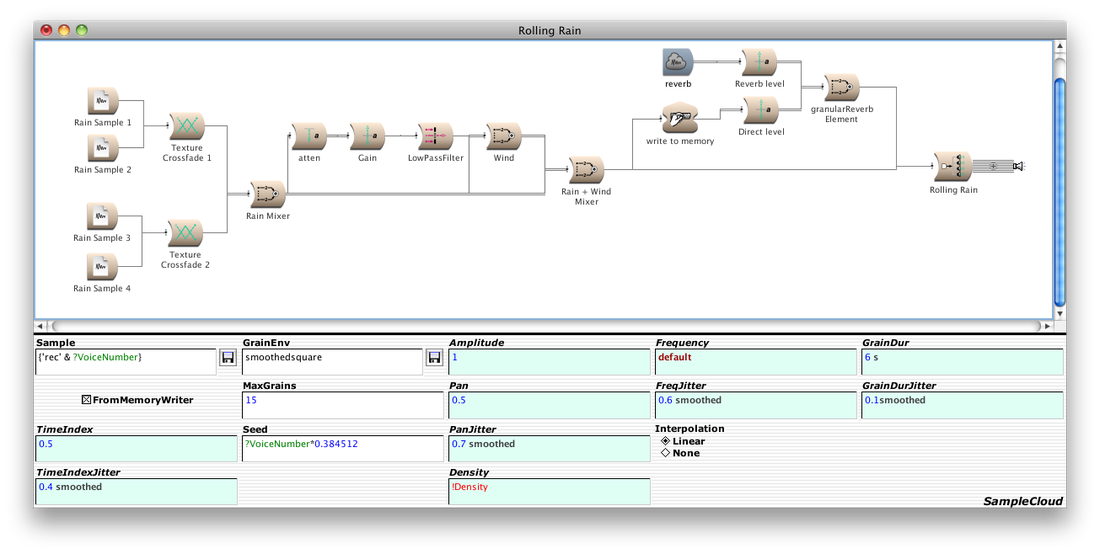

For processing, I put the samples into Kyma, and crossfaded for texture.

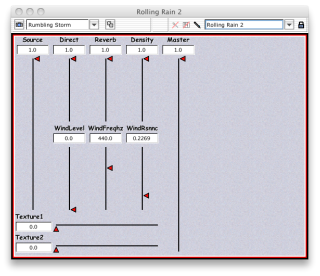

The howling wind sound is an analog-style low-pass filter whose frequency, level, and resonance are controlled by a Wacom Intuos4 pen/tablet.

The rain slowly swells, which was done by changing parameters of a granular reverb.

The thunder was also controlled by the Wacom tablet, with X, Y, and Z (pressure) dimensions mapped to making the thunder swell in level, density, and texture.

This could have been output in surround, but I don't have that many monitors ;). This style of "rain-synthesis" can also go on indefinitely.

Let me be clear and admit that saying "synthesis" doesn't mean that what you're hearing is entirely synthetic. As mentioned above, I did record samples of rain: about five minutes of a light shower, though I'm only using around 30 seconds of it.

The real goal is to turn that 30 seconds into a high-resolution storm that doesn't sound like it's been looped. This isn't a place where I want to bend a metal sheet or use a sizzling sausage to add tone to a film (those methods, mind you, are not beneath me).

Another part of this is to do an exercise in Kyma. If you're unfamiliar (most are; I ask everyone), Kyma is a niche sound design environment by Symbolic Sound Corporation.

Setting the Pieces

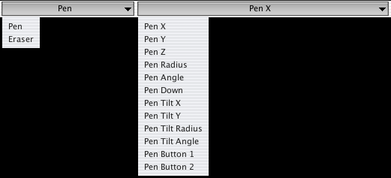

There are other ways to achieve what I'm attempting, but for my purposes, I record four channels from Kyma into Pro Tools. I could go up to eight channels, but the hardware I have keeps me at four. Truthfully, I don't even have four monitors, but I tell Kyma the intended "virtual" speaker placement, and it will output a quadrophonic storm regardless. In the event that I need only a stereo signal, the ambient nature of this allows me to simply trash the rear channels, rather than downmixing.
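To make the "trash the rear channels" idea concrete, here's a minimal Python sketch. It isn't anything from Kyma or Pro Tools. The function name `quad_to_stereo` and the channel order (front left, front right, rear left, rear right) are assumptions made up for illustration.

```python
def quad_to_stereo(frames):
    """Keep the front pair of each 4-channel frame and drop the rears.

    Hypothetical helper. Assumes each frame is ordered
    (front_left, front_right, rear_left, rear_right).
    Nothing is summed, so this is a discard, not a downmix.
    """
    return [(fl, fr) for fl, fr, _rl, _rr in frames]


# Two made-up quad frames, just to show the shape of the data.
quad = [(0.1, 0.2, 0.3, 0.4), (0.5, 0.6, 0.7, 0.8)]
stereo = quad_to_stereo(quad)
```

Because the rear channels are ambient material of the same kind as the fronts, dropping them loses immersion but not any essential part of the storm.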

After recording, the samples should be cut and faded into loops. The fades can be indiscernibly short, if desired. This isn't essential to making the process work, but does create smoother transitions, eliminating obtrusive "pops" when each file cycles back to its start. Since I'm dealing with such short clips, this is a good idea.
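As a rough sketch of what those short fades do (this is plain Python, not how Pro Tools or Kyma handles it), the snippet below applies a linear fade-in and fade-out to a clip so both ends sit at zero when it cycles. The helper name `fade_loop` and the 48-sample fade length are assumptions for illustration.

```python
def fade_loop(samples, fade_len):
    """Return a copy of `samples` with a linear fade-in and fade-out.

    Hypothetical helper: `samples` is a list of floats and `fade_len`
    is the fade length in samples (kept short so it is hard to hear).
    """
    if fade_len * 2 > len(samples):
        raise ValueError("fades are longer than the clip")
    out = list(samples)
    for i in range(fade_len):
        gain = i / fade_len      # ramps from 0.0 up toward 1.0
        out[i] *= gain           # fade in at the start
        out[-1 - i] *= gain      # mirrored fade out at the end
    return out


# A constant-level clip: looped as-is, its ends would click.
clip = [0.5] * 1000
looped = fade_loop(clip, fade_len=48)   # 48 samples is 1 ms at 48 kHz
```

With both ends pulled to zero, the jump from the last sample back to the first no longer produces a step in the waveform, which is what causes the pop.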

The "wind" is simply a low-pass filter with four poles. The gain, cutoff frequency, and feedback can be changed as its source resonates through it. At the input of the filter are the mixed rain samples; since the frequency range of rainfall is so broad (almost white noise), it keeps a constant level around the cutoff frequency of the filter.
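For anyone who wants to poke at the idea outside Kyma, here's a simplified stand-in: four one-pole low-pass stages in series, with the output fed back into the input for resonance. This is a generic ladder-style sketch, not Kyma's actual filter. The function name, parameter names, and the `tanh` limiter (added only to keep the feedback from running away) are all assumptions.

```python
import math
import random


def four_pole_lowpass(signal, cutoff_hz, feedback, gain, sample_rate=48000):
    """Run `signal` through four cascaded one-pole low-pass stages.

    Hypothetical sketch: `feedback` sends the last stage's output back
    to the input (resonance), and `gain` scales the final level.
    """
    g = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    stages = [0.0, 0.0, 0.0, 0.0]
    out = []
    for x in signal:
        # Subtract the fed-back output, then soft-limit to (-1, 1).
        x_in = math.tanh(x - feedback * stages[3])
        for k in range(4):
            stages[k] += g * (x_in - stages[k])   # one-pole smoothing
            x_in = stages[k]                      # feeds the next stage
        out.append(gain * stages[3])
    return out


# Seeded white noise stands in for the broadband rain input.
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(4800)]
wind = four_pole_lowpass(noise, cutoff_hz=400.0, feedback=3.0, gain=0.8)
```

Sweeping `cutoff_hz` and `feedback` over time, the way the tablet does in the real patch, is what would turn this steady filtered noise into something that howls.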

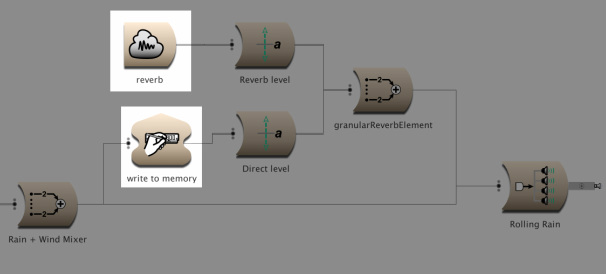

In this view, a Sound begins with its source on the left, and travels to the right.

It's then written to and pulled from memory via a sample cloud. This slices the audio into grains (small samples) of a specified length and applies a set number of other processes before playing each one back, all while continuously reading what has just been written from the source itself. Yes, this is a form of granular synthesis. In this case, however, the grains can be much longer than classic granular synthesis typically allows. The short grain durations of the classic approach were mainly due to a lack of processing horsepower, but that was some time ago. Instead of grains that are 1-100 milliseconds long, these can be as long as the user wants. In my case, I've set the grain duration to about 5 seconds.

If that's a little confusing, imagine this:

You have in front of you a book full of blank pages. A man approaches and begins writing. Page by page, he writes. He does not slow down, take breaks, look over what's written, or even look up at you; he only writes.

A woman approaches and starts to read what the man has written. She deliberately stays three pages behind him. She reads consistently, and at the exact same pace as the man, except that as she reads, she cuts the pages into small pieces of confetti and blows them into the air. Quite remarkably, it is a continuous process. Her eyes remain fixed on the pages, but her hands and lungs work to keep a constant, linear flow. You can vaguely see what's written on these small strips of paper, but you perceive it as a whole, more than any individual word. Her name is granular synthesis.

You grow tired of this nonsense, and ask the woman to make the strips of paper bigger, so that you can read them as they blow by. She asks, "Which size?" You answer, "Umm, I don't know... six square inches." She immediately adjusts and cuts the pieces differently. They now float down in pieces of two by three inches. You can not only read words, but phrases, and even sentences. This is fascinating. Why is he still writing? Doesn't he notice her destroying his work?

But again, your excitement fades. You want something more. You give the man a sentimental pat on the back for holding up like a champ, then you ask the woman for more. You don't know how, but just more. She complies. With a free hand, she manages to make copies of every page and cut unique strips (still six square inches) from each one. Astonished, you give her further instruction to improvise. Again, she asks for boundaries, which you provide.

Ok, enough of that. The sample cloud can read and duplicate audio, as well as apply a set amount of random, yet repeatable, deviation ("jitter") to each parameter. One of these parameters is the pan, which is one reason I chose to output in four discrete channels. Each new sample played from the cloud can be in a different place spatially. When many samples are triggered together, the effect is considerably immersive.
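Here's a minimal sketch of the "random, yet repeatable" part, in plain Python rather than Kyma. It only plans the grains (where each one starts in the source and where it sits in the pan field) and doesn't play any audio. The function `plan_grains`, its parameter names, and the use of a seed to get repeatability are my assumptions, not a description of how the sample cloud works internally.

```python
import random


def plan_grains(source_len, grain_len, count, start_jitter, pan_jitter, seed):
    """Plan `count` grains with jittered start positions and pans.

    Hypothetical sketch. Jitter values run from 0.0 (no deviation from
    the middle) to 1.0 (the full range). The same seed always gives the
    same plan, which is what makes the randomness repeatable.
    """
    rng = random.Random(seed)
    latest_start = source_len - grain_len   # last start that still fits
    grains = []
    for _ in range(count):
        start_pos = 0.5 + start_jitter * (rng.random() - 0.5)
        pan = 0.5 + pan_jitter * (rng.random() - 0.5)
        grains.append({
            "start": int(start_pos * latest_start),   # in samples
            "length": grain_len,
            "pan": pan,                               # 0.0 left, 1.0 right
        })
    return grains


# About 30 seconds of source at 48 kHz, cut into 5-second grains.
SOURCE_LEN = 30 * 48000
GRAIN_LEN = 5 * 48000
cloud_a = plan_grains(SOURCE_LEN, GRAIN_LEN, 8, 1.0, 1.0, seed=7)
cloud_b = plan_grains(SOURCE_LEN, GRAIN_LEN, 8, 1.0, 1.0, seed=7)
```

`cloud_a` and `cloud_b` come out identical because they share a seed; changing the seed gives a different but equally bounded scatter of grains.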

Watching Them Fall

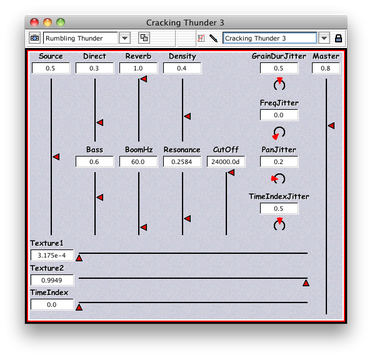

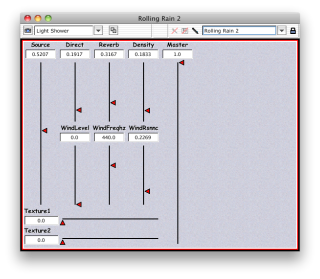

When the Sound is played, we see the Virtual Control Surface display all the specified hot values. By changing these, we can effectively determine the storm's intensity. Direct controls the amount of direct signal sent to the output, while Reverb attenuates the sample cloud. Density is an interesting variable, as it limits the number of grains being played. Here, a low Density value sounds mellow and sparse. With Density set to maximum, the Sound begins to take on the natural chaos of a storm. When the other values are driven upward, the volume scales appropriately, so that the beating raindrops are multiplied in both power and number.

There are other values displayed here, like Source, which determines the input gain before it reaches the Memory Writer. WindLevel, WindFreqhz, and WindResonance control the level, frequency, and feedback of a howling wind, respectively. I originally included this with the rain in order to record both simultaneously, but later separated the wind into another Sound.

The patch for thunder utilizes sub-bass, which operates almost identically to the wind, but resonates at much lower frequencies. Remember that nonsense about random deviation? Here, anything with "Jitter" attached sets the bounds for how far its associated parameter can spread.

So, if PanJitter is set to 1.0, the samples from the cloud can jump around anywhere from Left (0.0) to Right (1.0). If I change it to 0.5, then they can land anywhere from Center-Left (0.25) to Center-Right (0.75).
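The arithmetic behind those two cases, as a tiny Python check. The function name `pan_range` and the fixed center of 0.5 are assumptions based on the numbers above, not something read out of Kyma.

```python
def pan_range(pan_jitter, center=0.5):
    """Return the (leftmost, rightmost) pan a grain can land on.

    Hypothetical helper: the jitter amount is treated as the total
    width of the spread, split evenly on either side of `center`.
    """
    half_width = pan_jitter / 2.0
    return (center - half_width, center + half_width)


full = pan_range(1.0)   # (0.0, 1.0): anywhere from Left to Right
half = pan_range(0.5)   # (0.25, 0.75): Center-Left to Center-Right
```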

In addition, a low-pass filter can be activated by bringing the cutoff down into an audible frequency range. It was initially implemented to cut out most of the rain in the recordings. After I obtained clean (rain-less) samples from The Hollywood Edge, I left the filter in, but changed its cutoff to the Nyquist frequency as a bypass (48 kHz / 2 = 24 kHz), which is why it sits at 24,000 Hz in this image.
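The Nyquist arithmetic is simple enough to check in a couple of lines of Python. The helper names here are made up for illustration, and calling the filter "bypassed" at Nyquist is an idealization that assumes everything below the cutoff passes untouched.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2


def is_effectively_bypassed(cutoff_hz, sample_rate_hz):
    """True when the cutoff is at or above Nyquist, so nothing is cut."""
    return cutoff_hz >= nyquist_hz(sample_rate_hz)


processing_nyquist = nyquist_hz(48000)   # the 48 kHz processing rate
```

At the 48 kHz processing rate that comes to 24,000 Hz, so a cutoff parked there leaves the whole band alone, while anything lower starts to shave off the top.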

The Tempest

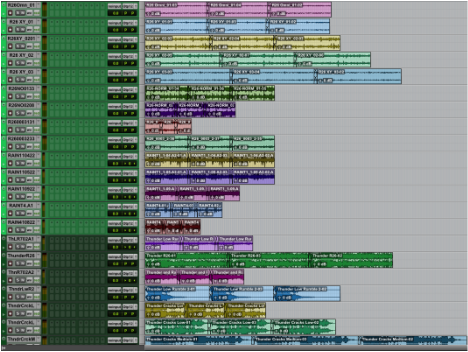

Finally, it's time to record. All that's necessary at this point is to arm the tracks in Pro Tools and begin "painting in" the rain.

I've recorded another example, to illustrate how simple it can be to work with multichannel audio in Reaper.

After some quick editing and mixing, we are finished! For this, I used Avid's built-in compression plugin, as well as Waves' MaxxBass for enhancing the lows and low-mids of the thunder. Check out this screenshot of the edited and mixed product, then scroll down to hear how it all comes together. The exact example you're viewing is "Thunderstorm Synthesis.A2"

Please note that SoundCloud compresses anything and everything it hosts, meaning a broadband ambient track like this won't sound great unless you hear the uncompressed original. To download the uncompressed file, click the "download" button in the top right corner of the SoundCloud player below.

Lastly, you can download this encoded AC3 file to hear the quadrophonic storm on any consumer surround system.

As I record new samples, create new examples, and discover new techniques, I'll update this post. If you'd like to discuss this or have comments, corrections, etc., leave a comment below or send email to [email protected].
